Module 0.2 - Models and Modules¶

Module 0.2¶

Models and Modules

Class Note¶

- You need to link your GitHub account

- Still several students with unlinked accounts

Functional Programming¶

Function Type¶

In [2]:

def add(a: float, b: float) -> float:

return a + b

def mul(a: float, b: float) -> float:

return a * b

v: Callable[[float, float], float] = add

Functions as Arguments¶

In [3]:

from typing import Callable, Iterable

def combine3(

fn: Callable[[float, float], float], a: float, b: float, c: float

) -> float:

return fn(fn(a, b), c)

print(combine3(add, 1, 3, 5))

print(combine3(mul, 1, 3, 5))

9 15

Functional Python¶

Functions as Returns

In [4]:

def combine3(

fn: Callable[[float, float], float],

) -> Callable[[float, float, float], float]:

def new_fn(a: float, b: float, c: float) -> float:

return fn(fn(a, b), c)

return new_fn

In [5]:

add3: Callable[[float, float, float], float] = combine3(add)

mul3: Callable[[float, float, float], float] = combine3(mul)

print(add3(1, 3, 5))

9

Higher-order Filter¶

Extended example

In [6]:

def filter(fn: Callable[[float], bool]) -> Callable[[Iterable[float]], Iterable[float]]:

def apply(ls: Iterable[float]):

ret = []

for x in ls:

if fn(x):

ret.append(x)

return ret

return apply

Higher-order Filter¶

Extended example

In [7]:

def more_than_4(x: float) -> bool:

return x > 4

filter_for_more_than_4: Callable[[Iterable[float]], Iterable[float]] = filter(

more_than_4

)

filter_for_more_than_4([1, 10, 3, 5])

Out[7]:

[10, 5]

Functional Python¶

Rules of Thumbs:

- When in doubt, write out defs

- Document the arguments that functions take and send

- Write tests in for loops to sanity check

Quiz¶

Outline¶

- Modules

- Visualization

- Datasets

Modules¶

Model¶

Models: parameterized functions.

- $m(x; \theta)$

- $x \text{ - input}$

- $m \text{ - model}$

Initial Focus:

- $\theta \text{ - parameters}$

Parameters¶

- Anything learned is in the parameters.

- Modern parameters sets are both:

- Large

- Complex

Growth in Parameter Size¶

Specifying Parameters¶

- Datastructures to specify parameters

- Requirements

- Independent of implementation

- Compositional

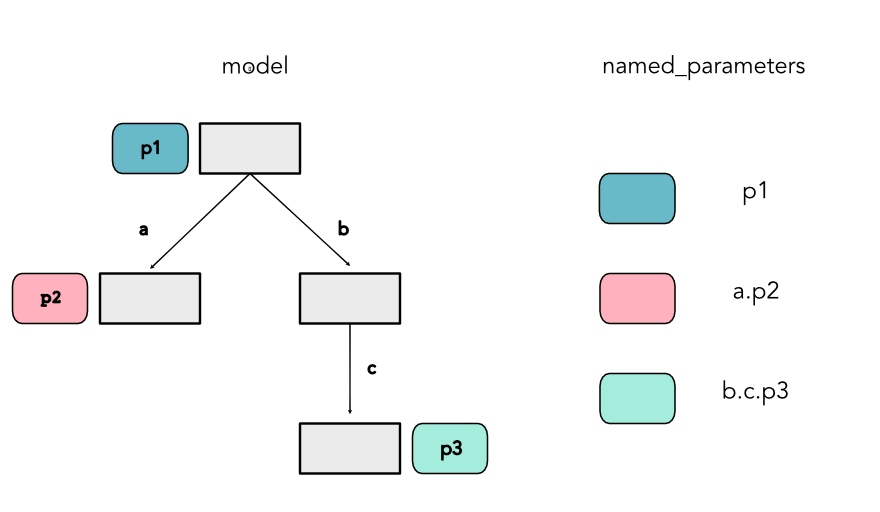

Module Trees¶

- Each Module owns a set of parameters

- Each Module owns a set of submodules

Module Trees¶

Benefits

- Can extract all parameters without knowing about Modules

- Can use mix and match Modules for different tasks

Downsides

- Verbose, repeats some functionality of declaration and use.

Module Example¶

In [8]:

class OtherModule(Module):

def __init__(self):

# Must initialize the super class!

super().__init__()

self.uncool_parameter = Parameter(60)

class MyModule(Module):

def __init__(self):

# Must initialize the super class!

super().__init__()

# Type 1, a parameter.

self.parameter1 = Parameter(15)

self.cool_parameter = Parameter(50)

# Type 2, user data

self.data = 25

# Type 3. another Module

self.sub_module_a = OtherModule()

self.sub_module_b = OtherModule()

Parameters¶

- Everything that is learned in the model

- Controlled and changed outside the class

Submodules¶

- Other modules that are called

- Store their own parameters and submodules

- Together forms a tree

Everything Else¶

- Modules act mostly like standard python objects

- You can have additional information stored

Module Example¶

In [9]:

MyModule().named_parameters()

Out[9]:

[('parameter1', 15),

('cool_parameter', 50),

('sub_module_a.uncool_parameter', 60),

('sub_module_b.uncool_parameter', 60)]

Extended Example¶

In [10]:

class Module2(Module):

def __init__(self):

super().__init__()

self.p2 = Parameter(10)

class Module3(Module):

def __init__(self):

super().__init__()

self.c = Module4()

class Module4(Module):

def __init__(self):

super().__init__()

self.p3 = Parameter(15)

Extended Example¶

In [11]:

class Module1(Module):

def __init__(self):

super().__init__()

self.p1 = Parameter(5)

self.a = Module2()

self.b = Module3()

Module1().named_parameters()

Out[11]:

[('p1', 5), ('a.p2', 10), ('b.c.p3', 15)]

How does this work?¶

- Internally

Modulespies to findParameterandModuleobjects - A list is stored internally.

- Implemented through Python magic methods

Detail: Magic Methods¶

- Any method that starts and ends with

__ - Used to override default behavior of the language.

- We will use for many things, including operator overloading

Interception Code¶

Module construction

In [12]:

class MyModule(Module):

def __setattr__(self, key, val):

if isinstance(val, Parameter):

self.__dict__["_parameters"][key] = val

elif isinstance(val, Module):

self.__dict__["_modules"][key] = val

else:

super().__setattr__(key, val)

Parameter Naming¶

- Every parameter in a model has a unique name.

- Naming is determined by walking the tree.

- Names are prefixed by the path from the root.

Module Naming¶

Other Module Metadata¶

- Other information can be communicated through the tree.

- Common example: Is the model in train or test mode?

Homework Note¶

- Must be recursive implementation

- Have to walk the full tree

- (Companies love this as an interview question!)

Real World Examples¶

Code for language modeling

In [13]:

from torch import nn

class Block(nn.Module):

def __init__(self, n_ctx, config, scale=False):

super().__init__()

hidden_size = config.n_embd

inner_dim = config.n_inner if config.n_inner is not None else 4 * hidden_size

self.ln_1 = nn.LayerNorm(hidden_size, eps=config.layer_norm_epsilon)

self.attn = Attention(hidden_size, n_ctx, config, scale)

self.ln_2 = nn.LayerNorm(hidden_size, eps=config.layer_norm_epsilon)

if config.add_cross_attention:

self.crossattention = Attention(

hidden_size, n_ctx, config, scale, is_cross_attention=True

)

self.ln_cross_attn = nn.LayerNorm(

hidden_size, eps=config.layer_norm_epsilon

)

self.mlp = MLP(inner_dim, config)

GPT 2¶

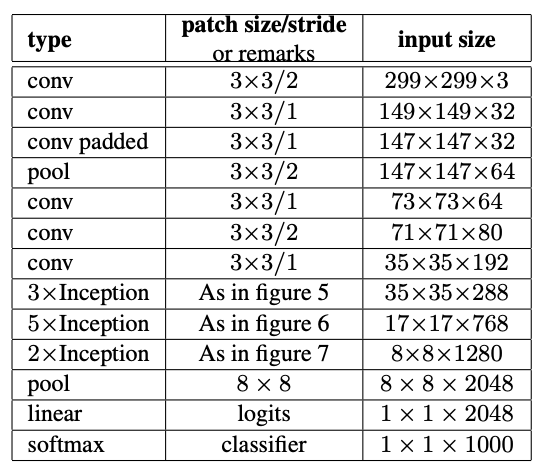

Real World Examples¶

Block from image classification

In [14]:

class Inception3(nn.Module):

def __init__(

self,

num_classes=1000,

aux_logits=True,

transform_input=False,

inception_blocks=None,

init_weights=None,

):

super(Inception3, self).__init__()

...

self.aux_logits = aux_logits

self.transform_input = transform_input

self.Conv2d_1a_3x3 = conv_block(3, 32, kernel_size=3, stride=2)

self.Conv2d_2a_3x3 = conv_block(32, 32, kernel_size=3)

self.Conv2d_2b_3x3 = conv_block(32, 64, kernel_size=3, padding=1)

self.maxpool1 = nn.MaxPool2d(kernel_size=3, stride=2)

self.Conv2d_3b_1x1 = conv_block(64, 80, kernel_size=1)

self.Conv2d_4a_3x3 = conv_block(80, 192, kernel_size=3)

self.maxpool2 = nn.MaxPool2d(kernel_size=3, stride=2)

self.Mixed_5b = inception_a(192, pool_features=32)

self.Mixed_5c = inception_a(256, pool_features=64)

self.Mixed_5d = inception_a(288, pool_features=64)

self.Mixed_6a = inception_b(288)

self.Mixed_6b = inception_c(768, channels_7x7=128)

self.Mixed_6c = inception_c(768, channels_7x7=160)

self.Mixed_6d = inception_c(768, channels_7x7=160)

self.Mixed_6e = inception_c(768, channels_7x7=192)

if aux_logits:

self.AuxLogits = inception_aux(768, num_classes)

self.Mixed_7a = inception_d(768)

self.Mixed_7b = inception_e(1280)

self.Mixed_7c = inception_e(2048)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.dropout = nn.Dropout()

self.fc = nn.Linear(2048, num_classes)

Visualization¶

Main Idea¶

- Show properties of your model as you code

- See real time graphs as you train models

- Make convincing figures of your full system

Code Snippet¶

Streamlit windows

import streamlit as st

st.write("## Sandbox for Model Training")

...

st.plotly_chart(fig)

Gotchas¶

- Changes to the visualization code will autoupdate

- Changes to the library will not autoupdate

Datasets¶

Sneak Preview¶

- Task 0.5: Intro to our first ML problem

- Basic separation of points on a graph

- Manual classifier

Datasets¶

- Simple

- Diag

- Split

- Xor

Parameter Knobs¶

- W1

- W2

- Bias