Broadcasting¶

Broadcasting makes tensors convenient and efficient to use, which comes in handy particularly for zip operations. So far all of our zip operations assume two input tensors of exactly the same size and shape. However there are many interesting cases to zip two tensors of different size.

Perhaps the simplest case is we have a vector of size 3 and want to add a scalar constant to every position:

# In math notation, vector1 + 10

vector1 + tensor([10])

Intuitively, we would like to interpret this expression as the standard vector+scalar: adding 10 to each position. However, the above operation will fail because of shape mistach: we are adding a tensor of shape(1,) to vector1 which has shape (3,).

We could ask users to create a tensor of the same shape instead, but it is both annoying and, more importantly, inefficient:

vector1 + tensor([10, 10, 10])

Broadcasting is a protocol that allows us to automatically interpret the frist expression as implying the second one. Inside zip, we pretend that 10 is a vector of shape (3,) when zipping it with a vector of shape (3,). Again, this is just an interpretation: we never actually create this vector.

This gives us the first rule of broadcasting:

Note

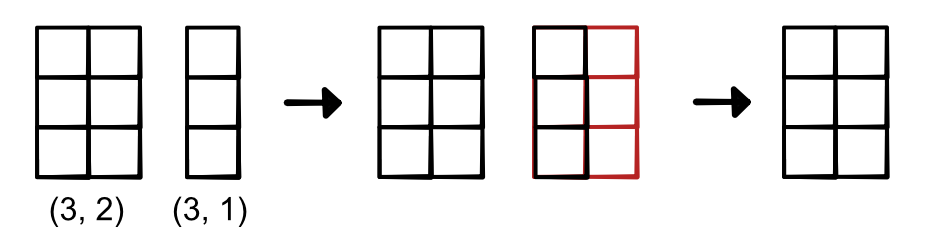

Rule 1: Any dimension of size 1 can be zipped with dimensions of size n > 1 by assuming the dimension is copied n times.

Now let's apply this approach to a matrix of shape (4, 3):

matrix1 + tensor([10])

Here we are trying to zip a matrix (2-D) of shape (4, 3) with a vector (1-D) of shape (1,). Here we are not just off on the shape, but also on the number of dimensions.

However, recall that adding an extra dimension of shape-1 doesn't change the size of the tensor. Therefore we can allow our protocol to add these in. Here if we add an empty dimension and then apply rule 1 twice, we can interpret the above expression as an efficient version of:

matrix1 + tensor([10] * 12, shape=(4, 3))

Note

Rule 2: Extra dimensions of shape 1 can be added to a tensor to ensure the same number of dimensions with another tensor.

Finally, there is a question of where to add the empty dimension. This is not an issue in the above example but could become an issue in more complicated cases. Thus we introduce another rule:

Note

Rule 3: Any extra dimension of size 1 can only be implicitly added on the left side of the shape.

This rule has the impact of making the process easy to follow and replicate. You always know what the shape of the final output will be. For example:

# This will fail: mismatch of (4, 3) and (4,)

matrix1 + vector2.view(4)

# These two expression are equivalent

matrix1 + vector1

matrix1 + vector1.view(1, 3)

We can apply broadcasting as many times as we want:

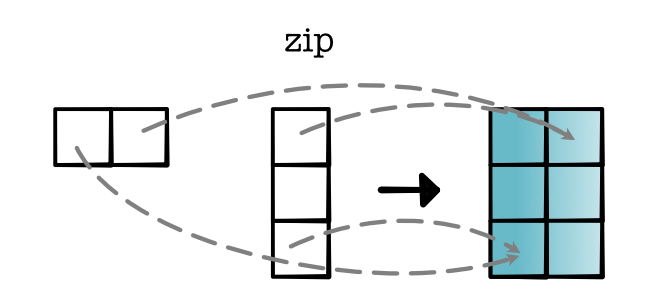

# The result has shape (3, 2)

tensor1.view(1, 2) + tensor2.view(3, 1)

Here is a more complicated example:

# The result has shape (7, 2, 3, 5)

tensor1.view(1, 2, 3, 1) + tensor2.view(7, 2, 1, 5)

We end this guide with two important notes:

Broadcasting is only about shapes. It does not take strides into account in any way. It is purely a high-level protocol.

Broadcasting has some impact on the backward pass. We will discuss some in the code base, but it is not required for any of the tasks.

Examples¶

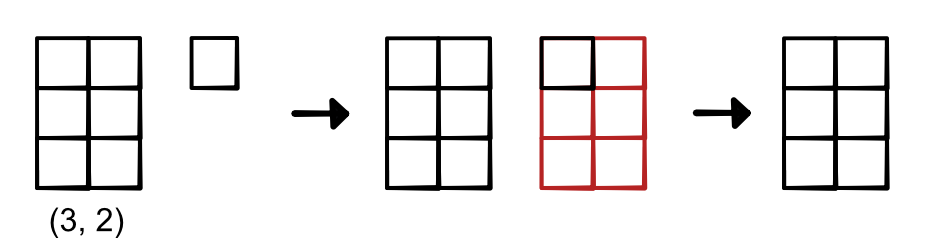

Tensor-Scalar operations can be easily written using broadcasting for tensors of any dimension.

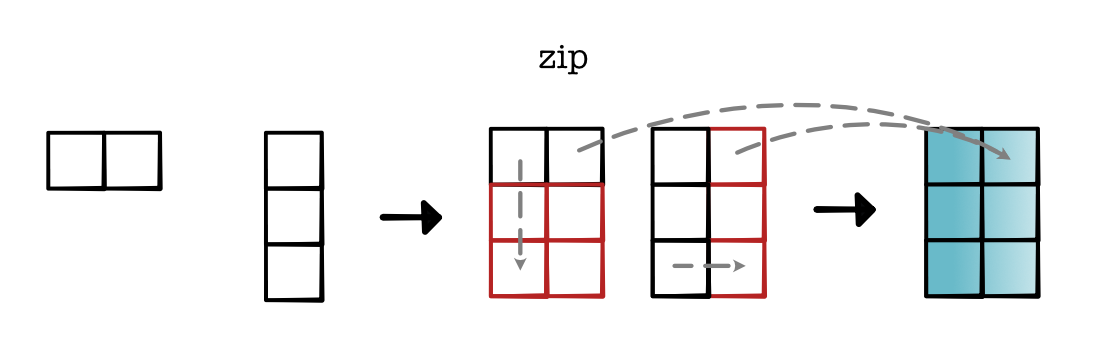

Matrix-vector operations can be written using broadcasting, but you need to be careful to make sure that the vector is shaped such the the dimensions align. This can be done with view calls.

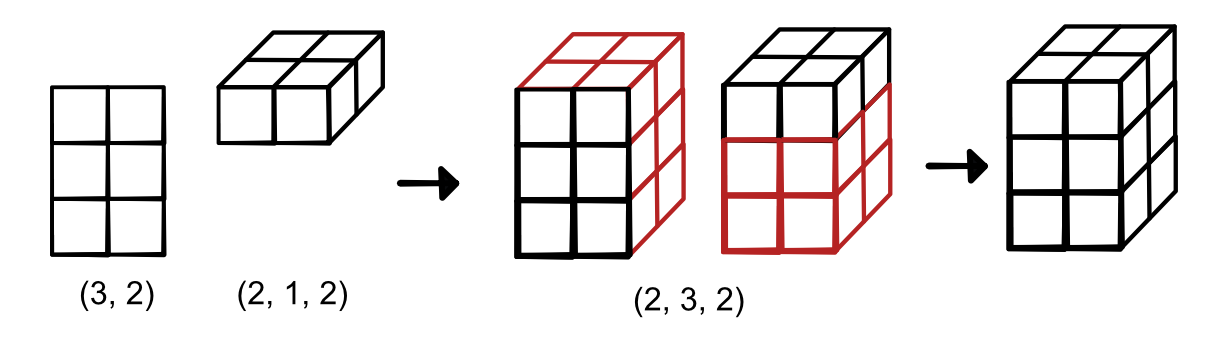

Matrix-matrix operations can be written using broadcasting even when the dimensions don't align. Here is an example of that process.

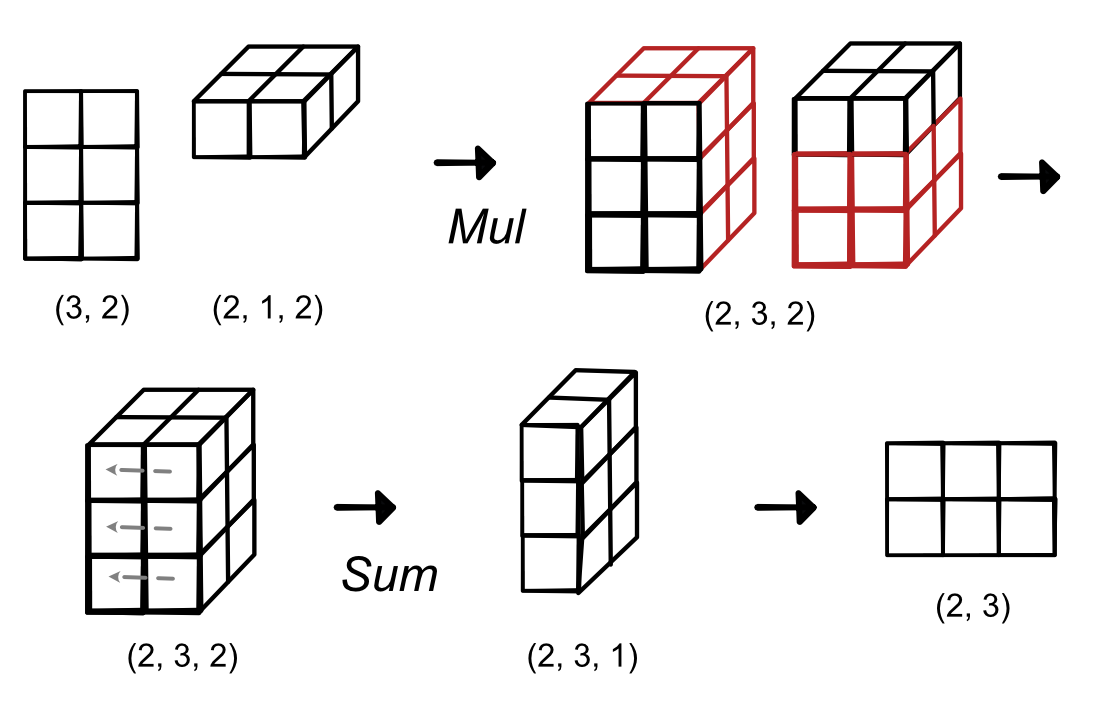

Matrix multiplication can be written in this style, here is \((B x A^T)\) where A is 3 x 2 and B is 2 x 2 . . (And you will need to use this for the assignment). However, note this is a memory inefficient way to do matrix multiplication, as it needs to create an intermediate tensor in the process.